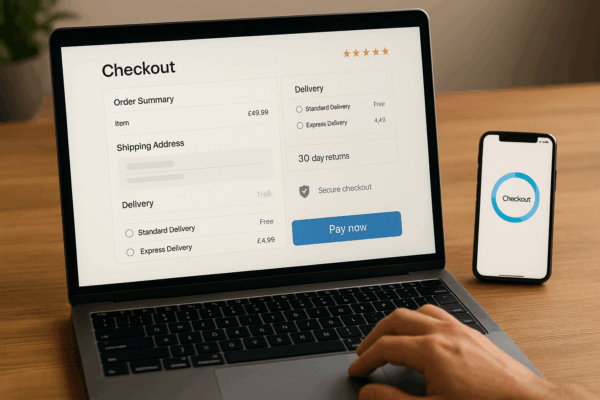

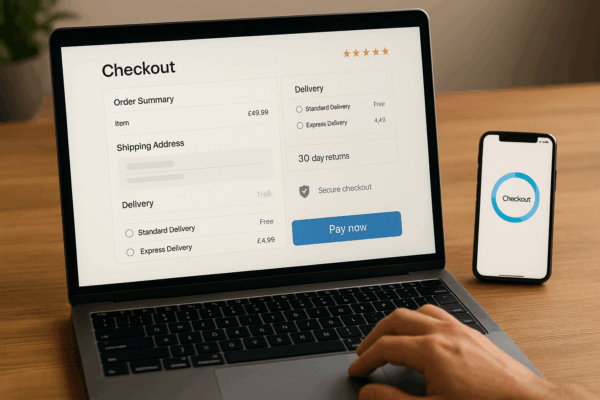

If you run WordPress, you’ll know that sometimes you need to put up barriers. Not to people, but to bots.

Some bots are useful — like Googlebot indexing your content — but others are less welcome. AI crawlers, for instance, are busy vacuuming up the internet to feed large language models. And when you’re rebuilding or rebranding, you may not want any search engine crawling half-finished work. On top of that, there are times when your site just needs to go dark briefly for maintenance.

Instead of juggling half a dozen separate plugins and hand-editing your robots.txt file, you can manage it all in one place.

1. Block AI crawlers

Flip a switch and the plugin will add Disallow: / for the big AI user agents (GPTBot, OAI-SearchBot, Anthropic’s ClaudeBot, Perplexity, Meta’s scrapers and more). It also sends X-Robots-Tag headers carrying “noai” and “noimageai”, non-standard directives that signal your opt-out to any vendor that chooses to honour them.
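
To make the “Disallow: /” rule concrete, here is a rough Python sketch of the kind of robots.txt group such a switch writes: several User-agent lines sharing one Disallow: / line. The function name and the agent tokens are our assumptions for illustration, not the plugin’s actual list or code.

```python
# Sketch only: build one robots.txt group that keeps a set of AI crawlers out.
# The user-agent tokens below are illustrative assumptions, not the plugin's list.
AI_AGENTS = [
    "GPTBot",
    "OAI-SearchBot",
    "ClaudeBot",
    "PerplexityBot",
    "Google-Extended",
    "Applebot-Extended",
    "meta-externalagent",
]


def ai_block_rules(agents: list[str]) -> str:
    """Return one group: a User-agent line per bot, then a single Disallow: /."""
    if not agents:
        return ""
    lines = [f"User-agent: {agent}" for agent in agents]
    lines.append("Disallow: /")  # "/" matches every path on the site
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(ai_block_rules(AI_AGENTS))
```

Grouping the agents this way keeps the file short; giving each bot its own group works just as well.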

2. Block search bots (temporarily)

Redesigning, restyling, or quietly working on content that’s not ready to be public? Enable “Block Search Bots” and the plugin lists every major crawler individually in robots.txt: Googlebot, Bingbot, Baiduspider, Yandex, Applebot, and dozens more. You can also add your own bots or set a crawl-delay for the crawlers that honour it.
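
A sketch of that per-crawler layout, again in Python and under our own assumptions (the function, its block, crawl_delay and extra_bots parameters, and the bot tokens are hypothetical): each crawler gets its own group, custom bots are appended, and an optional Crawl-delay line is added.

```python
# Sketch only: one robots.txt group per search crawler. The bot tokens and the
# block / crawl_delay / extra_bots parameters are illustrative assumptions.
SEARCH_BOTS = ["Googlebot", "Bingbot", "Baiduspider", "Yandex", "Applebot"]


def search_bot_rules(bots, block=True, crawl_delay=None, extra_bots=()):
    """Return robots.txt text with a separate group for each crawler.

    block=True writes "Disallow: /" (keep out entirely); block=False writes an
    empty "Disallow:", which allows everything. crawl_delay (whole seconds)
    adds a Crawl-delay line, which only some crawlers honour.
    """
    groups = []
    for bot in [*bots, *extra_bots]:
        lines = [f"User-agent: {bot}", "Disallow: /" if block else "Disallow:"]
        if crawl_delay is not None:
            lines.append(f"Crawl-delay: {int(crawl_delay)}")
        groups.append("\n".join(lines))
    if not groups:
        return ""
    # A blank line between groups keeps each crawler's rules separate.
    return "\n\n".join(groups) + "\n"
```

Only some crawlers read Crawl-delay; Googlebot, for example, ignores it.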

3. Maintenance mode made easy

Sometimes the site needs to go offline for a short while. With Maintenance Mode on, visitors see a clean 503 page with your own message and an optional Retry-After header. Logged-in admins can still use the site normally, so you can keep working while the public waits.
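
Here is a hedged sketch of that behaviour as Python WSGI middleware rather than WordPress PHP. The maintenance_mode function and its is_admin callback are hypothetical; in WordPress the admin check would typically be a capability check such as current_user_can().

```python
# Sketch only: maintenance mode expressed as Python WSGI middleware.
# The plugin does this inside WordPress (PHP); is_admin and the parameter
# names here are hypothetical stand-ins.
import html
from typing import Callable, Optional


def maintenance_mode(app, message: str, is_admin: Callable[[dict], bool],
                     retry_after: Optional[int] = None):
    """Wrap a WSGI app so admins pass through and everyone else gets a 503."""
    def wrapper(environ, start_response):
        if is_admin(environ):
            # Admins see the real site while maintenance is on.
            return app(environ, start_response)
        body = ("<!doctype html><title>Maintenance</title>"
                f"<p>{html.escape(message)}</p>").encode("utf-8")
        headers = [("Content-Type", "text/html; charset=utf-8"),
                   ("Content-Length", str(len(body)))]
        if retry_after is not None:
            # Seconds until clients (including crawlers) should try again.
            headers.append(("Retry-After", str(retry_after)))
        start_response("503 Service Unavailable", headers)
        return [body]
    return wrapper
```

Returning 503 rather than an ordinary 200 page matters: it tells search engines the outage is temporary, so they are less likely to index the maintenance message.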

Privacy: You choose whether AI companies can use your content.

Control: Pause search indexing while you redevelop, without risking half-built pages in Google.

Reliability: A built-in maintenance mode keeps things tidy during updates or migrations.

Add extra bots by name (one per line).

Preview your robots.txt output right inside WordPress.

Extend the plugin further with developer filters.
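
The “one per line” field could be cleaned up along these lines. This is a sketch with a hypothetical parse_extra_bots function and an assumed validation rule (letters, digits, hyphens and underscores only); the plugin’s own handling may differ.

```python
# Sketch only: clean up a "one bot per line" settings field. The function name
# and the allowed-character rule are assumptions for illustration.
import re

# Assumed rule: accept names made of letters, digits, hyphens and underscores.
_BOT_NAME = re.compile(r"[A-Za-z0-9_-]+")


def parse_extra_bots(field: str) -> list[str]:
    """Trim each line, skip blank or invalid names, drop case-insensitive repeats."""
    seen = set()
    bots = []
    for raw in field.splitlines():
        name = raw.strip()
        if not _BOT_NAME.fullmatch(name):
            continue  # empty lines and names with spaces or symbols are skipped
        if name.lower() not in seen:
            seen.add(name.lower())
            bots.append(name)
    return bots
```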

This plugin is a small but mighty toolkit: it blocks who you don’t want, when you don’t want them, and gives you a proper maintenance switch. No messy .htaccess edits, no separate plugins to keep track of. Just simple controls under Settings → Bot & Maintenance.

It suits site owners who want to protect content from AI crawlers, pause search indexing during a rebuild, and handle maintenance cleanly, all from one plugin.

Grab the plugin here: Asporea Bots Maintenance Manager

Author: Asporea Digital Pty. Ltd.

Compatibility: Works with any modern WordPress site

Disclaimer: This plugin is provided free of charge. It is licensed under the GNU GPL v2 or later. Use it at your own risk. Test on staging and keep reliable backups. We do not provide support or updates unless agreed as a paid service. Nothing here limits your rights under the Australian Consumer Law.

Your blog posts, pricing pages and support docs are valuable. Letting third parties harvest them reduces your differentiation.

Robots.txt and header directives are broadly respected by reputable vendors. They are not perfect, but they are a professional baseline.

You can always add hard blocking at the edge later using your WAF or CDN. Start with signals, add enforcement as needed.

Adds robots.txt rules for major AI crawlers: OpenAI, Google-Extended, Applebot-Extended, Anthropic, Perplexity, Meta and more.

Sends X-Robots-Tag: noai, noimageai, SPC on HTML pages to reinforce your policy.

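Warns you if a physical robots.txt file exists. Your web server serves that file directly, bypassing the virtual robots.txt that WordPress generates, so you will need to paste the same rules into it.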

Includes a Tools screen that lists which bots are being told to back off.
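
For the header side, here is a hedged sketch as Python WSGI middleware (hypothetical names; the plugin itself works inside WordPress). It appends X-Robots-Tag only when the response is HTML.

```python
# Sketch only: add X-Robots-Tag to HTML responses, as Python WSGI middleware.
# The default value uses directives named in the text; the middleware is a
# hypothetical stand-in for what the plugin does inside WordPress.
def add_x_robots_tag(app, value="noai, noimageai"):
    def wrapper(environ, start_response):
        def start_with_tag(status, headers, exc_info=None):
            content_type = next(
                (v for k, v in headers if k.lower() == "content-type"), "")
            # Only HTML pages get the header; other content types pass through.
            if content_type.split(";")[0].strip().lower() == "text/html":
                headers = list(headers) + [("X-Robots-Tag", value)]
            return start_response(status, headers, exc_info)
        return app(environ, start_with_tag)
    return wrapper
```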

The code is lightweight, transparent and built the way we build our client tools. If you prefer, we can install and verify it for you.

Visit yourdomain.com/robots.txt and you will see Disallow rules for the AI crawlers.
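
To check this programmatically rather than by eye, Python’s standard urllib.robotparser module can read the file and report which agents are disallowed. The helper names are ours, and the agent list you pass in is an assumption.

```python
# Sketch: check which crawlers a robots.txt file tells to stay out, using the
# standard library's robots.txt parser. Helper names are hypothetical.
import urllib.robotparser


def blocked_agents_from_text(robots_txt, agents, path="/"):
    """Return the agents that this robots.txt text disallows for path."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, path)]


def blocked_agents(robots_url, agents, path="/"):
    """Fetch a live robots.txt (network access needed) and run the same check."""
    parser = urllib.robotparser.RobotFileParser(robots_url)
    parser.read()
    return [agent for agent in agents if not parser.can_fetch(agent, path)]
```

Calling blocked_agents("https://yourdomain.com/robots.txt", ["GPTBot", "ClaudeBot", "Googlebot"]) should return only the AI crawlers once the rules are live. Python’s parser matches agent names by substring, which is simpler than the major search engines’ own parsers, so treat it as a sanity check.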

Run curl -I https://yourdomain.com/ and you will see the X-Robots-Tag header in the response.
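
The same check in Python, as a minimal sketch with hypothetical helper names: a HEAD request like curl -I, then a test that the X-Robots-Tag values include noai and noimageai.

```python
# Sketch: a Python stand-in for "curl -I", checking the X-Robots-Tag header.
# Helper names are hypothetical; required directives default to the text's.
import urllib.request


def has_directives(header_values, required=("noai", "noimageai")):
    """True if the X-Robots-Tag values, taken together, include every required directive."""
    found = {d.strip().lower() for value in header_values for d in value.split(",")}
    return all(directive in found for directive in required)


def fetch_x_robots_tag(url):
    """Send a HEAD request (like curl -I) and return all X-Robots-Tag values."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.headers.get_all("X-Robots-Tag") or []
```

Usage: has_directives(fetch_x_robots_tag("https://yourdomain.com/")). Unlike plain curl -I, urlopen follows redirects, so you get the headers of the final page.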

Over time, you should see fewer AI user agents requesting normal content in your logs. Requests for robots.txt itself are fine; requests for your content should taper off.
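
If you want a number rather than an impression, a short script can tally these hits. This sketch assumes your server writes the common Combined Log Format and uses an illustrative list of AI agent tokens; adjust both to your setup.

```python
# Sketch only: tally AI-crawler requests in an access log, separating robots.txt
# fetches from content requests. Assumes the Combined Log Format; the agent
# tokens are illustrative assumptions.
import re
from collections import Counter

# Captures the request path and the User-Agent field of a Combined Log Format line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)
AI_AGENTS = ("GPTBot", "ClaudeBot", "PerplexityBot", "meta-externalagent")


def tally(lines, agents=AI_AGENTS):
    """Return (robots_hits, content_hits) as Counters keyed by agent token."""
    robots_hits, content_hits = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # not a recognised request line
        user_agent = match.group("ua").lower()
        for agent in agents:
            if agent.lower() in user_agent:
                path = match.group("path").split("?", 1)[0]
                bucket = robots_hits if path == "/robots.txt" else content_hits
                bucket[agent] += 1
                break
    return robots_hits, content_hits
```

Feed it a week of log lines at a time and compare the two counters: robots.txt hits may hold steady while content hits should fall.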

Robots.txt is voluntary. Reputable players usually comply. If you want enforcement rather than signals, add a firewall rule that blocks or challenges those user agents.
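
The matching logic behind such a rule is simple. Here is a sketch as Python WSGI middleware with hypothetical names; in practice you would configure the equivalent rule in your WAF or CDN rather than in application code.

```python
# Sketch only: refuse requests whose User-Agent matches a blocklist.
# Names and tokens are hypothetical; a WAF/CDN rule applies the same idea at the edge.
BLOCKED = ("GPTBot", "ClaudeBot", "PerplexityBot")


def is_blocked(user_agent: str, blocked=BLOCKED) -> bool:
    """Case-insensitive substring match against the blocklist."""
    ua = user_agent.lower()
    return any(token.lower() in ua for token in blocked)


def block_user_agents(app, blocked=BLOCKED):
    """Wrap a WSGI app and answer 403 to blocked user agents."""
    def wrapper(environ, start_response):
        if is_blocked(environ.get("HTTP_USER_AGENT", ""), blocked):
            body = b"Forbidden"
            start_response("403 Forbidden", [("Content-Type", "text/plain"),
                                             ("Content-Length", str(len(body)))])
            return [body]
        return app(environ, start_response)
    return wrapper
```

Matching on the User-Agent string alone is easy to evade, since any client can claim any name; edge services can pair it with checks against the IP ranges some vendors publish.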

This does not remove anything previously collected. It sets your policy from now on.

You control the list. Add or remove user agents with a simple filter.
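
WordPress filters work by passing a value through every registered callback in priority order. This Python model of add_filter and apply_filters shows the idea. The hook name ai_bot_list is hypothetical, so check the plugin’s code for the real hook, which you would use from PHP with WordPress’s add_filter().

```python
# Sketch: a Python model of WordPress-style filters editing a bot list.
# The hook name "ai_bot_list" is hypothetical; check the plugin for the real one.
from collections import defaultdict

_filters = defaultdict(list)


def add_filter(hook, callback, priority=10):
    """Register a callback that receives the value and returns a modified one."""
    _filters[hook].append((priority, callback))


def apply_filters(hook, value):
    # Lower priority numbers run first, as in WordPress; the stable sort keeps
    # registration order for callbacks with equal priority.
    for _, callback in sorted(_filters[hook], key=lambda pair: pair[0]):
        value = callback(value)
    return value
```

For example, one callback can append a custom bot while a later one, at priority 20, drops OAI-SearchBot if you still want to appear in ChatGPT search.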

Need a hand setting it up or folding it into your existing performance and SEO stack? We can do it for you. Want a custom policy banner for your legal pages and API responses? We can add that too. Ready to protect your content advantage? Talk to us at Asporea Digital. We build practical tools that keep you in control.

Did you enjoy this read? Release Notes is a newsletter that lands in your inbox once a month with one focused idea, a quick how-to, and a tiny check to measure progress. Subscribe for better site management, optimised websites and practical steps to make your site more secure.

Short reads, real results.
